For those wishing to learn from our experiences, here is a list of lessons learned for the SeeMore Project. These are a mix of lessons from the CS Team led by Prof. Kirk Cameron and the Sculpture Team led by Prof. Sam Blanchard.

1. Passion for the project is essential. SeeMore was an idea dreamed up in moments of inspiration with only a vague idea of the toil and perspiration that would be needed to see it through. The project was in high gear and required much attention from April 2013 through late summer 2017, when SeeMore moved to a semi-permanent home in Torgersen Hall on the Virginia Tech campus. SeeMore is still operational today and requires some upkeep from time to time. Over that length of time, funding ebbed and flowed and students came and went. Without the steadfast dedication of Kirk and Sam to shepherding SeeMore from concept to reality and thereafter, the project would have failed. At times, only a passion for the work sustained the project; had either PI walked away at any point, SeeMore would likely not have survived. Passion is also contagious: dozens of students contributed substantially to the project over the years, and their energy and dedication were exciting to behold.

2. Collaboration is key in any project where each team relies on the other for success. This was especially true across the fields of computer science and sculpture. Communication must be frequent, open, and honest. While professional courtesy wins out most of the time, it helps to have a team that can push through adversity, stress, and disagreements. With SeeMore, both team leads had the passion and determination to see the project to completion. We ran out of money several times, and we ran out of patience with each other a few times as well. But with two leads who understood the importance of keeping emotions in check during stressful times, we were all willing, in the end, to go the extra mile and work long hours to get the job done.

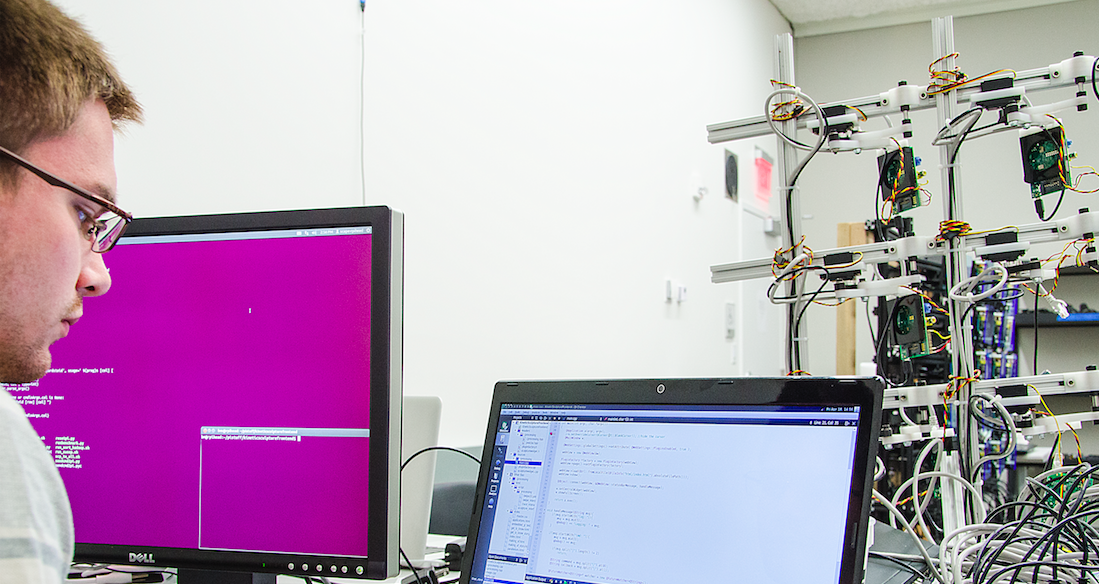

3. Cultural barriers are real and represent some of the biggest challenges. For example, on the art side, Sam and his team were always looking to optimize the physical infrastructure, from wiring length and power strip locations to the latest hardware components. On the CS side, by contrast, every change is costly: when developing one-of-a-kind prototype software, testing is a significant time and effort sink. Catastrophic software bugs (e.g., faulty servo drivers, an incomplete or non-working interface) or hardware issues (e.g., disk failure) can kill an entire exhibit. Changing components, such as swapping an old Raspberry Pi for a newer model, is at times terrifying, since any element in the software stack may fail and must be retested. A late swap to the Raspberry Pi model B completely took down SeeMore just before packing for the first exhibit in Vancouver. In fact, after more than a week of fixes, including a major servo driver rewrite and install, the first successful full test of SeeMore with the new hardware took place after final assembly on the exhibit floor in Vancouver. This problem was mostly unavoidable, since we had no idea that the older models would become unavailable in volume when we needed them. But the stress on both teams was palpable, and it could have gone very wrong if we hadn’t come together in time.

4. Organization and adaptability were also critical to our success. Some challenges were nefarious and persistent. Consider, for example, transporting a 1-ton object 9 feet tall and 8 feet wide through a standard 32-inch door frame. This took extensive planning at design time as well as adapting to venues with doors as narrow as 30 inches and ceilings as low as 8.5 feet. The same object had to fit in a single 13-foot moving van with two people in order to economically ship SeeMore nearly 3,000 miles from Blacksburg, Virginia to Vancouver, B.C. And the 1-ton weight could exceed the weight capacity of the shipping van or an exhibit location. Sam was able to leverage his extensive experience shipping artwork worldwide to ensure SeeMore made it to every venue intact and operational with limited wear and tear. This included seeing the cluster through customs from the U.S. to Canada and back. As another example, during the span of the project from 2013 to 2017, 13 different versions of the Raspberry Pi computer were released. Such rapid turnover limits the number of replacement parts available for whichever version of the Raspberry Pi is in use at any moment. As mentioned, just one small change in the system (e.g., a board that runs hotter, uses more memory, or has different ports in new locations) affects everything from power consumption, memory cards, and wire length to the amount of torque placed on the servos. Each of these issues occurred at some point during the project, creating the need to repurchase hardware, upgrade memory capacity, increase wire length and weight, manually reflash 256 memory cards, and change wire adapters. These changes also affected the reliability of the servos and the Raspberry Pi computers. Needless to say, adaptation became the norm for both teams, and thorough documentation was essential: personnel changes were frequent due to graduations, internships, etc., and some of the solutions (e.g., how to pack the 13-foot van, how to refresh a RPi image) would have been impossible to repeat without some written record of the methods used.

5. Scale was a problem for both teams. Whenever you rely on 256 entities working in synchronized harmony, the room for error shrinks. Scale was difficult for the CS Team since we needed to keep track of what each of the 256 devices was doing, and we had to ensure they all operated in a synchronous fashion while accounting for electronic delays, servo torque, and overheating at scale. The software also had to be resilient enough to handle node failures, which became common, occurring every hour or so of operation. But scale was probably tougher on the Sculpture Team, which had to assemble and reassemble thousands and thousands of parts at every exhibit. This process took a team of 3-5 people about 1.5 days. The CS Team helped as much as possible and the Sculpture Team was always optimizing as best they could, but this was a serious limitation and a significant expenditure. Shipping SeeMore for an exhibit costs about $15-25k, mostly due to the labor costs of assembly.
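To make the resilience requirement concrete, here is a minimal sketch of one common way to tolerate frequent node failures: track a heartbeat per node and hand work only to nodes that have reported in recently. This is an illustration, not the SeeMore software. The names `NodeTracker` and `assign_work` and the 5-second timeout are assumptions; only the count of 256 nodes comes from the text above.

```python
from dataclasses import dataclass, field

NODE_COUNT = 256             # from the text: 256 devices
HEARTBEAT_TIMEOUT_S = 5.0    # assumed value, for illustration only


@dataclass
class NodeTracker:
    """Hypothetical tracker: remembers when each node last reported in."""

    timeout_s: float = HEARTBEAT_TIMEOUT_S
    last_seen: dict = field(default_factory=dict)  # node id -> last heartbeat time

    def heartbeat(self, node_id: int, now: float) -> None:
        """Record that node_id reported in at time `now` (seconds)."""
        self.last_seen[node_id] = now

    def live_nodes(self, now: float) -> list:
        """Nodes whose most recent heartbeat is within the timeout."""
        return sorted(
            node for node, seen in self.last_seen.items()
            if now - seen <= self.timeout_s
        )

    def failed_nodes(self, now: float, expected=range(NODE_COUNT)) -> list:
        """Expected nodes that have gone silent or were never heard from."""
        live = set(self.live_nodes(now))
        return [node for node in expected if node not in live]


def assign_work(items: list, live: list) -> dict:
    """Spread work items round-robin over whichever nodes are still live."""
    if not live:
        raise RuntimeError("no live nodes available")
    plan = {node: [] for node in live}
    for index, item in enumerate(items):
        plan[live[index % len(live)]].append(item)
    return plan
```

With a scheme like this, a node that overheats or reboots simply drops out of the next round of assignments and rejoins once its heartbeat resumes, so the exhibit keeps running with fewer nodes instead of stalling.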

6. Storytelling and funding were essential to SeeMore’s success. The best projects begin with an idea and a story that describes how the proposed work will help the community at large. SeeMore’s story always hinged on using art and movement as a computer science education tool. This story resonated with all our investors. The first investment came from PI Cameron, who used his own university funds to purchase prototype systems for the teams to experiment with and to gauge the ultimate budget needs. Once the scope was clear, Kirk and Sam sought additional funding, first from university-level institute seed resources (about $25k). The university sought to invest in promising collaborations across seemingly disparate areas (e.g., computer science and sculpture). Thereafter, we sought funding from the National Science Foundation, which has a standing mission to support innovation in computer science education. At that time, we also reached out to a colleague in Engineering Education to help us survey the exhibit participants and later write up our findings for top education venues. Sam was also tenacious in seeking in-kind donations to supplement capital expenditures on Raspberry Pis (RPi Foundation) and servo mechanisms (Hitec Multiplex). He was also able to leverage volume purchases, buying items in bulk to secure discounts from vendors.

7. Consider the audience and venue. Lil’ SeeMore consisted of a wall-mounted 5 x 6 grid of moving Raspberry Pis and could be displayed on a wall in a small gallery for viewing by 5-10 people at a time. SeeMore consisted of a ten-foot-tall, 1-ton, cylindrical frame with a circular footprint about 9 feet in diameter (6 feet for the artifact and 2-3 feet of clearance). For the smaller display, we designed an interactive kiosk that walked people through a demonstration of parallel computing. We learned at the first venue that interactivity slowed the throughput of the audience significantly. Thereafter, all demos were limited to under 2 minutes and ran non-interactively on a large, elevated monitor to increase capacity and keep the audience moving. The final SeeMore demo allowed a live speaker to narrate or visitors to simply watch and learn on their own. This enabled hundreds of visitors per hour and ultimately tens of thousands of visitors to the SeeMore exhibit across several venues.

8. Upkeep is significant. As mentioned, SeeMore has been on display at various venues since 2014. When SeeMore is used extensively (8-10 hours a day for 3-5 days in succession), the wear and tear on its electronic parts is significant. At one point, we estimated losing about 10-30 Raspberry Pi nodes per day (either via servos overheating or RPi failure). Some of these were soft errors that would clear on reboot or cool down. Even so, we had to bring about 75-100 replacement parts on every trip to keep the system running. Over SeeMore’s lifetime, every Raspberry Pi and every servo has been replaced at least once, probably twice. At this point, when we are asked to exhibit SeeMore on location, we budget for part replacements ($3-5k) to ensure SeeMore remains operational.
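The spare-parts figure can be sanity-checked with some quick arithmetic. The sketch below multiplies out the failure rates and exhibit lengths quoted above. The helper names are hypothetical, and the share of failures that are soft errors is an assumed parameter, since no figure for it is given here.

```python
def failure_events(per_day: tuple, days: tuple) -> tuple:
    """Return the (low, high) count of node failures over one exhibit."""
    return per_day[0] * days[0], per_day[1] * days[1]


def hard_failures(events: int, soft_percent: int) -> int:
    """Failures that need a replacement part, rounded up.

    soft_percent is an ASSUMED share of failures that clear on reboot or
    cool-down; the text gives no figure for it.
    """
    hard_share = 100 - soft_percent
    return -(-events * hard_share // 100)  # integer ceiling division


# Figures from the text: 10-30 node failures per day over 3-5 days.
low, high = failure_events(per_day=(10, 30), days=(3, 5))
```

With the quoted figures, `low` and `high` come out to 30 and 150 failure events per exhibit. The 75-100 spare parts carried on each trip fall inside that range, which is consistent with some of the failures being soft errors that need no replacement.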

The Unknown Unknowns. In closing, beware the significant barriers, exceptions, errors, and other issues that you cannot foresee. The key here is to be agile and ready to pivot as needed to see the project to success. For example, while prototyping and scaling the number of nodes to 30, we kept having seemingly random reboots, memory corruption, and hardware failures. Servos were also burning out more often than expected. After weeks of testing, we were flummoxed. At an all-hands meeting to discuss the problem, Kirk remembered an incident from his time as a sysadmin before grad school. He had managed a network of computers where devices (disks, network cards, motherboards) would fail somewhat randomly. He had determined that power spikes in large buildings go unnoticed by most equipment (e.g., copiers, lights), but the overload can sometimes destroy the circuits of sensitive devices (e.g., computers). Kirk speculated this was happening in SeeMore. After we installed power conditioners (e.g., UPSs) between SeeMore and the building power, most of the issues with the Raspberry Pis disappeared. We later determined the servos were suffering from overuse due to torque from the attached wiring. Sam was able to design a lower-torque version of the articulating arms that helped tremendously. Some problems are inherent and unavoidable, but our agility (along with tenacity and passion) enabled us to solve problems like these and complete the project despite many unknown unknowns.